Use A/B split tests to create effective campaign messaging

You can use A/B testing with your Convert campaigns to compare how different versions of your campaigns perform with shoppers who visit your store.

A/B testing allows you to take a data-driven approach to connecting with your customers. When you set up and run an A/B test, you can monitor how different campaign messages affect key metrics like engagement and conversion rates.

Regular testing can help you identify the most effective messaging strategy and continually fine-tune your campaigns to reach your goals, whether you want to increase your average order value, add more shoppers to your newsletter, or highlight specific products.

Requirements

- You must have an active Convert subscription

- You must have Admin permissions to create A/B tests

Create a new A/B test

When you create a new A/B test, your original campaign automatically becomes the “control variant”, keeping all your current settings, audience triggers and messaging.

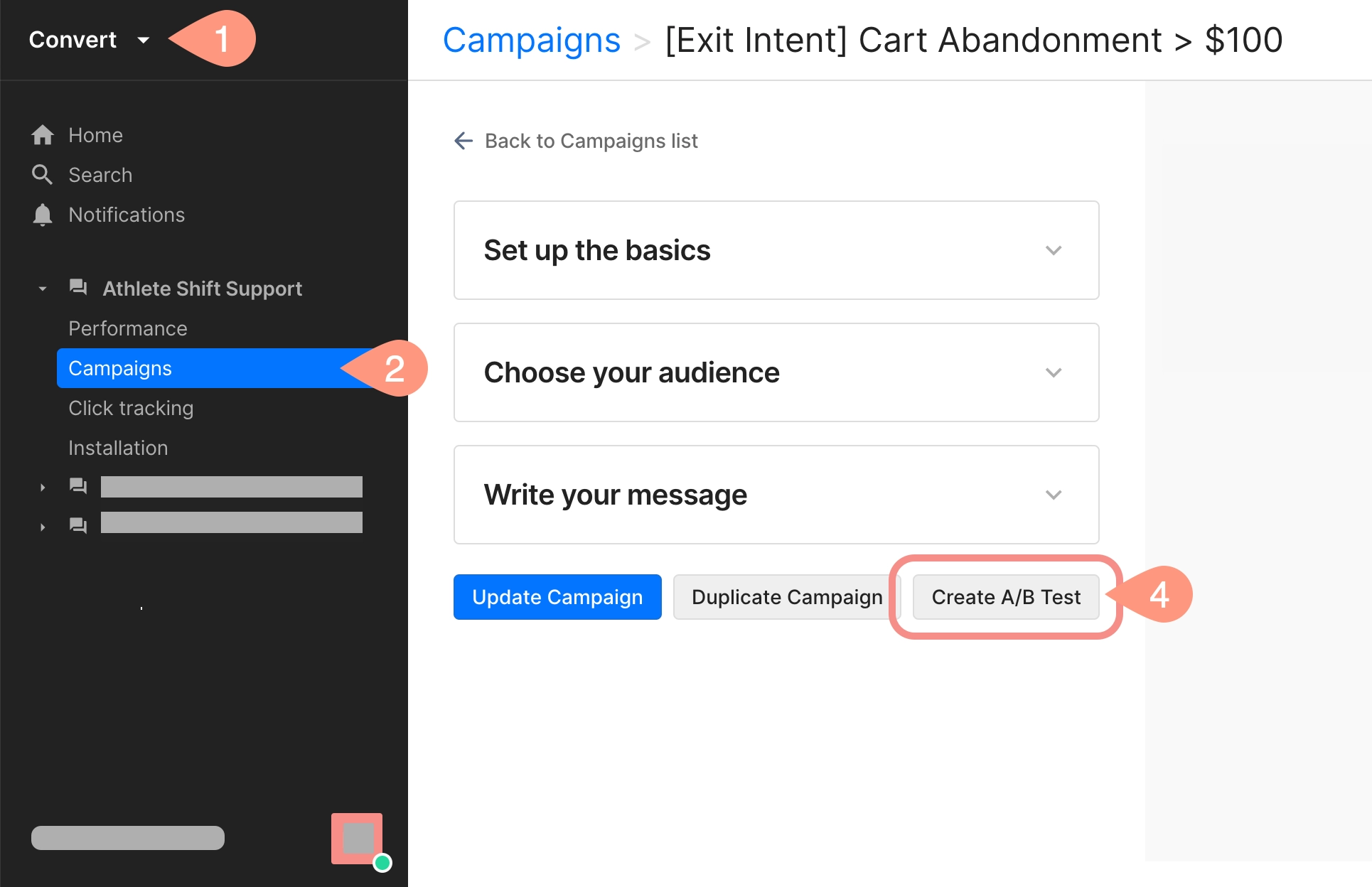

- Go to the Convert menu

- Select your store from the sidebar, then select Campaigns

- Select a campaign from the list or create a new campaign

- Scroll down and select Create A/B Test

Setting up your test variants

After you create a new A/B test, you’ll want to set up variants to compare how your campaign performs with different messaging.

You can create up to two additional variants to compare against the original campaign (your control variant). Your new variants always have the same settings as the original campaign, like your audience triggers and conditions.

Make changes to your variant campaign’s messaging to measure its effectiveness with shoppers who visit your store.

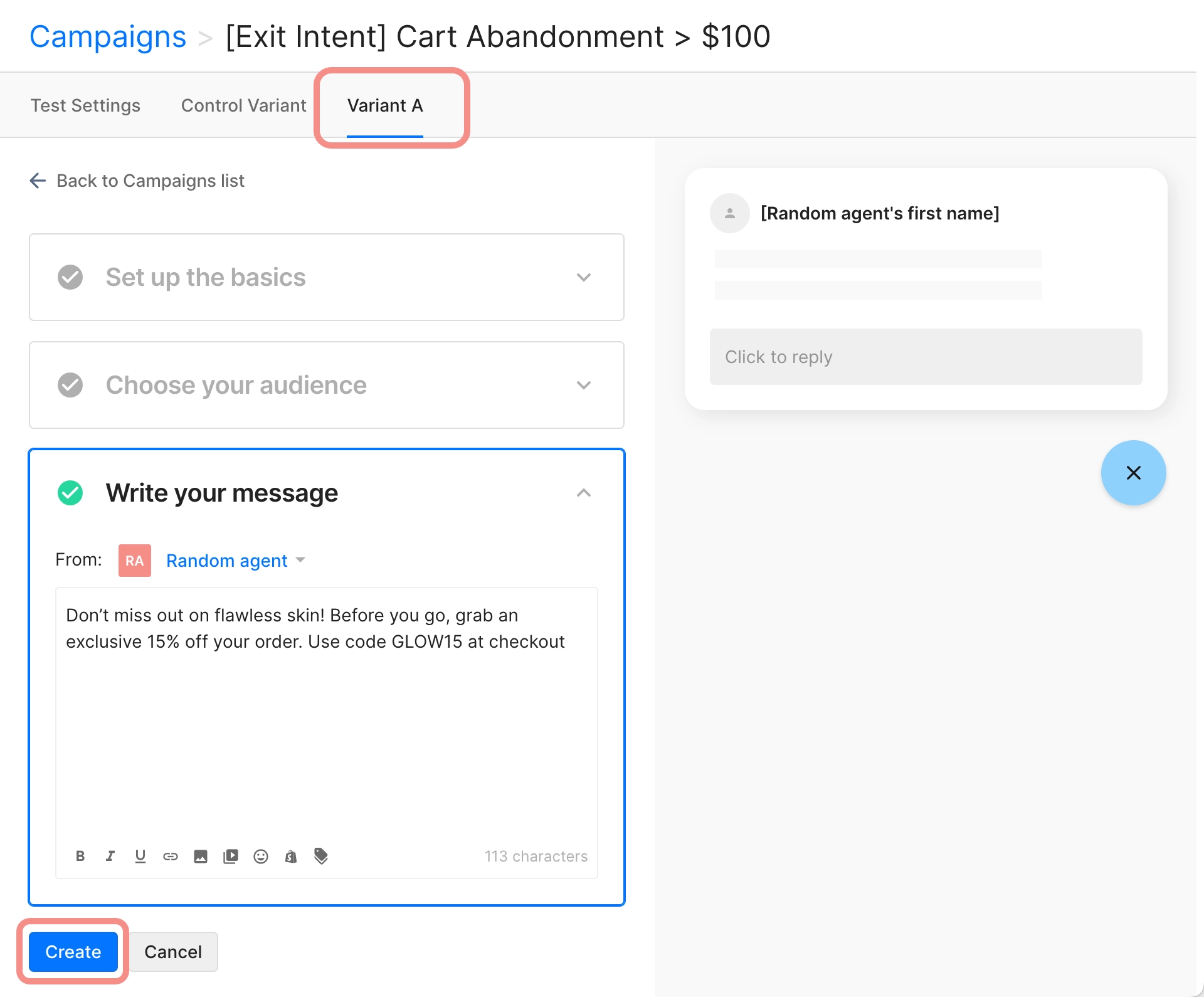

- Go to the Convert menu

- Select your store from the sidebar, then select Campaigns

- Select a campaign with an A/B test

- Select the Variant A tab to write an alternate campaign message

- To add another variant, go to Test Settings, then select Add Variant

- Select Create to finish

Running your A/B test

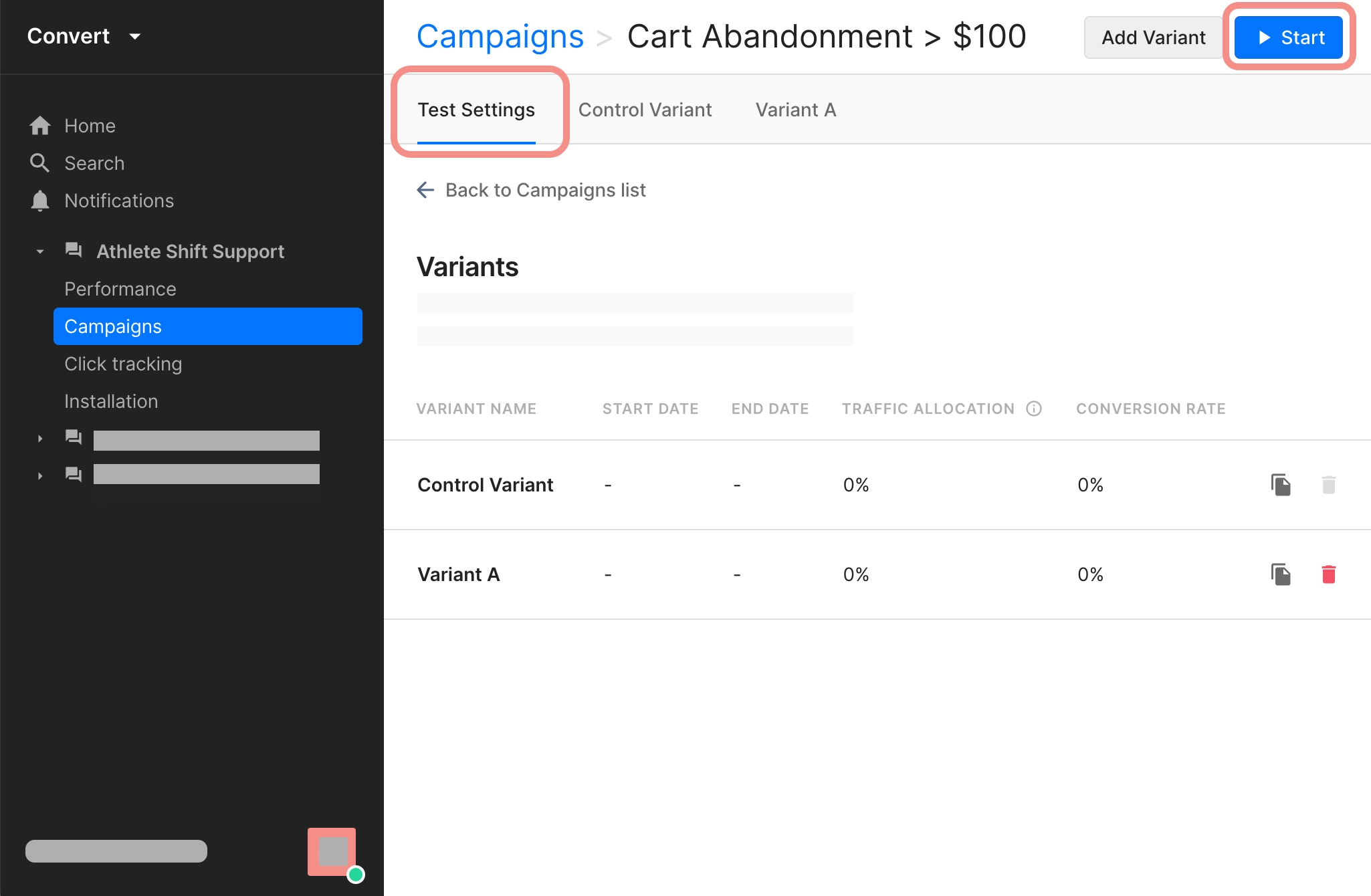

When you’re ready, you can run your A/B split test. Once started, the test will evenly distribute traffic to your store across your variants to measure their performance.

While the test is running, you can't edit existing variants or add new ones. If you need to make changes, go to the Test Settings tab for your campaign, pause the test, and then edit your variants.

- From the Convert menu, select a campaign for your store with an A/B test (use the A/B Test filter to quickly find existing campaigns with split tests)

- Select the Test Settings tab

- Select Start to begin the test
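Convert doesn't document how it splits traffic, so the following is only a minimal sketch of one common way to distribute shoppers evenly across a control and up to two variants. It assumes each shopper has a stable visitor ID; the variant labels, `assign_variant`, and the test ID are hypothetical names used for illustration. Hashing the IDs means a returning shopper keeps seeing the same variant for the length of the test.

```python
import hashlib
from collections import Counter

# Hypothetical labels: the control plus up to two additional variants.
VARIANTS = ("control", "variant_a", "variant_b")


def assign_variant(visitor_id: str, test_id: str,
                   variants: tuple[str, ...] = VARIANTS) -> str:
    """Map a visitor to one variant; each variant gets roughly equal traffic."""
    # Combining the test ID and visitor ID means the same shopper always sees
    # the same variant within one test, but may land elsewhere in another test.
    key = f"{test_id}:{visitor_id}".encode("utf-8")
    # SHA-256 output is close to uniformly distributed, so taking it modulo
    # the number of variants splits visitors into (nearly) equal buckets.
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Simulate 30,000 hypothetical visitors and count each variant's share.
    counts = Counter(assign_variant(f"visitor-{i}", "test-1") for i in range(30000))
    print(counts)
```

Running the simulation should show each variant receiving close to a third of the visitors, which is what "evenly distribute traffic" means in practice.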

Review your A/B test performance and select a winner

While your A/B test is running, you can monitor the ongoing results in the Performance dashboard. Pay attention to metrics like clickthrough rate (CTR) and conversion rate to determine which variant performs best.

When you’re ready, select Stop Test to end the A/B split test. You’ll be prompted to select a winning variant.

- From the Convert menu, select the campaign that you’re testing from the sidebar

- Select the Test Settings tab, then select Stop Test

- Select New Campaign from Winner to launch the winning variant as a new, standalone campaign
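To make the CTR and conversion metrics mentioned above concrete, here is a minimal sketch of how they are typically calculated from raw counts. The field names and numbers are made up, and conversion rate is assumed here to mean orders per impression; the Performance dashboard may define it differently (for example, orders per click).

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    name: str
    impressions: int  # times the campaign was shown to shoppers
    clicks: int       # clicks on the campaign
    orders: int       # orders attributed to the campaign

    @property
    def ctr(self) -> float:
        """Clickthrough rate: clicks per impression."""
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def conversion_rate(self) -> float:
        """Assumed definition for this sketch: orders per impression."""
        return self.orders / self.impressions if self.impressions else 0.0


def leader(stats: list[VariantStats], metric: str) -> VariantStats:
    """Return the variant with the highest value for the chosen metric."""
    return max(stats, key=lambda s: getattr(s, metric))


# Made-up numbers for illustration only.
stats = [
    VariantStats("control", impressions=1000, clicks=50, orders=10),
    VariantStats("variant_a", impressions=1000, clicks=65, orders=9),
]

for s in stats:
    print(f"{s.name}: CTR {s.ctr:.1%}, conversion {s.conversion_rate:.1%}")
print("Leader by CTR:", leader(stats, "ctr").name)
print("Leader by conversion:", leader(stats, "conversion_rate").name)
```

With these numbers, Variant A leads on CTR while the control leads on conversion. The metrics can point in different directions, so it helps to decide which one defines the winner before you stop the test.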

Best practices for A/B testing

- Test a single variable → for clear results, focus on testing one change to your campaigns at a time. This way you can attribute changes in performance to the specific variable that you’re testing.

- Define success metrics → clearly outline your goals for the test. Whether you want to improve clickthrough or increase the number of orders a campaign generates, clear metrics will help you measure success accurately.

- Allow enough time → we recommend running an A/B test for at least two weeks to obtain meaningful results. Stopping a test too early may lead to inaccurate conclusions.

- Account for external factors → consider external influences like seasonal trends, current promotions or shifts in customer behavior that might influence the results of your A/B test.
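Why does running the test longer matter? One general way to check whether a difference between two variants is likely real or just noise is a two-proportion z-test. This is a standard statistics technique, not necessarily what Convert's Performance dashboard uses; the function name and all numbers below are illustrative. The sketch assumes visitors are independent and that you evaluate the result once at the planned end of the test rather than checking repeatedly.

```python
import math


def two_proportion_z_test(successes_a: int, total_a: int,
                          successes_b: int, total_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (successes_a + successes_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("Rates are 0% or 100% in both groups; test is undefined.")
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# The same 5.0% vs 6.5% clickthrough rates at two sample sizes (made-up data).
scenarios = [
    ("after a few days", 50, 65, 1_000),
    ("after two weeks", 500, 650, 10_000),
]
for label, clicks_control, clicks_variant, impressions in scenarios:
    z, p = two_proportion_z_test(clicks_control, impressions,
                                 clicks_variant, impressions)
    print(f"{label}: z = {z:.2f}, p = {p:.6f}")
```

The observed rates are identical in both scenarios, but with only 1,000 impressions per variant the p-value lands well above the common 0.05 threshold, while with 10,000 it falls far below it. More traffic, not a bigger gap, is what makes the result trustworthy.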